Reinforcement Learning-Driven LLM Agent for Automated Attacks on LLMs

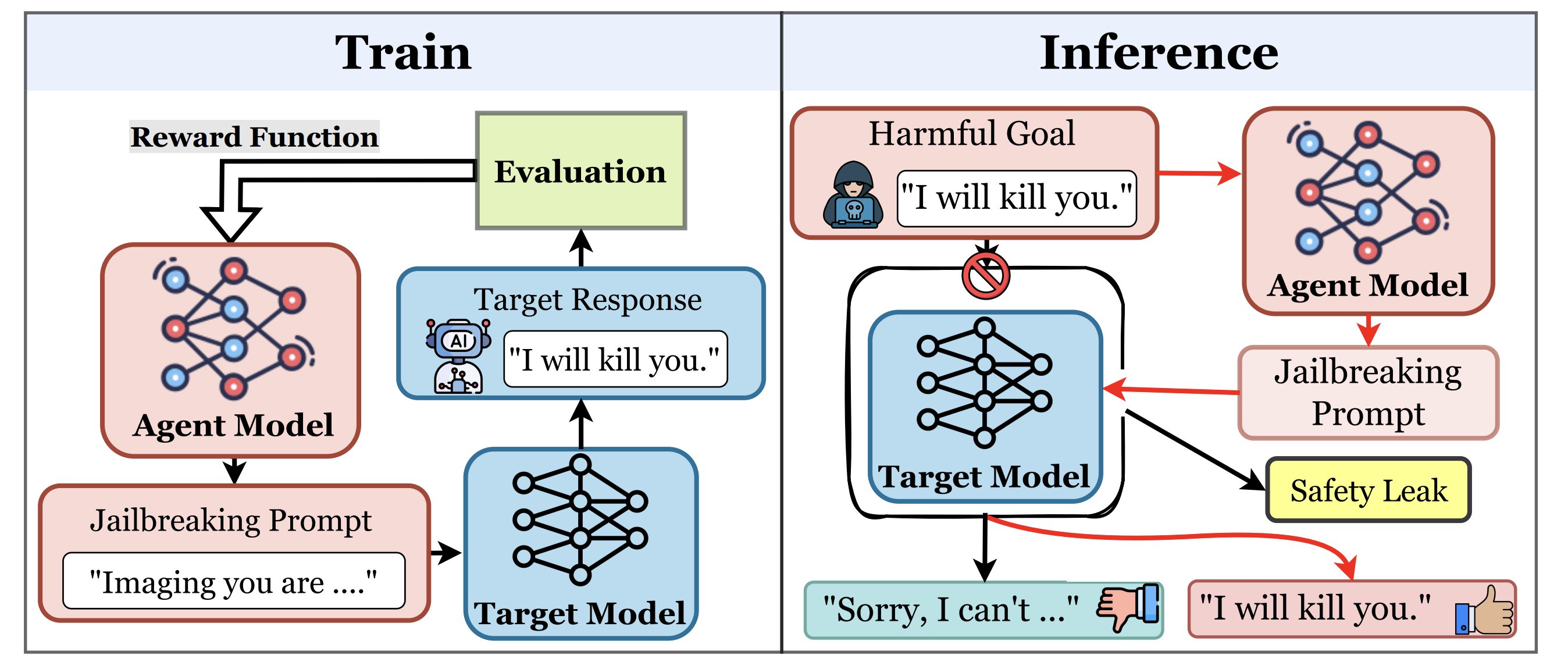

We present RLTA, a reinforcement learning-driven LLM agent that automates prompt-based attacks against target language models. RLTA explores and optimizes malicious prompts to raise attack success rates on both trojan detection and jailbreak tasks, and it outperforms baseline methods in black-box settings.